vSAN Health Service - Network Health - RDMA Configuration Health

Article ID: 326624


Products

VMware vSAN

Issue/Introduction

This article explains the Network Health – RDMA Configuration Health check in the vSAN Health Service and describes why it might report an error.

Environment

VMware vSAN 7.0.x

Resolution

Q: What does the Network Health – RDMA Configuration Health check do?

vSAN 7.0 Update 2 and later supports Remote Direct Memory Access (RDMA) communication. RDMA allows data to move directly from the memory of one computer to the memory of another without involving the operating system or CPU. The transfer is offloaded to the RDMA-capable Host Channel Adapters (HCAs).

vSAN uses the RoCE v2 protocol, which requires a network configured for lossless operation. To use RDMA in vSAN, every vSAN data node in the cluster must therefore meet the following conditions, which this health check verifies:

- Each vSAN data node must have an active, vSAN-certified, RDMA-capable NIC configured as a vSAN uplink.

- Each vSAN data node must be connected through a lossless Layer 2 network. To ensure a lossless Layer 2 environment, the Data Center Bridging (DCB) mode must be configured as IEEE, and the Priority Flow Control (PFC) value must be set to 3, meaning PFC is enabled for priority 3 (see the DCB settings check in the troubleshooting steps below).
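The two per-host requirements above can be expressed as a simple check. This is a minimal sketch and not how the vSAN Health Service is implemented. The HostRdmaConfig type, its fields, and the host_problems function are hypothetical. The sketch assumes the values have already been collected from each host, for example with the esxcli commands shown later in this article.

```python
from dataclasses import dataclass, field

# Hypothetical per-host summary; names are illustrative, not a VMware API.
@dataclass
class HostRdmaConfig:
    name: str
    rdma_uplink_active: bool   # active, vSAN-certified RDMA NIC configured as a vSAN uplink
    dcb_mode: str              # DCB mode reported for the uplink, e.g. "IEEE"
    pfc_priorities: set = field(default_factory=set)  # priorities with PFC enabled

REQUIRED_PFC_PRIORITY = 3

def host_problems(host: HostRdmaConfig) -> list:
    """Return the unmet RDMA requirements for one vSAN data node (empty list = OK)."""
    problems = []
    if not host.rdma_uplink_active:
        problems.append("no active RDMA-capable NIC configured as a vSAN uplink")
    if host.dcb_mode != "IEEE":
        problems.append(f"DCB mode is {host.dcb_mode!r}, expected 'IEEE'")
    if REQUIRED_PFC_PRIORITY not in host.pfc_priorities:
        problems.append(f"PFC is not enabled for priority {REQUIRED_PFC_PRIORITY}")
    return problems

# Example hosts (hypothetical names and values).
ok_host = HostRdmaConfig("esx-01", True, "IEEE", {3})
bad_host = HostRdmaConfig("esx-02", True, "CEE", set())
print(host_problems(ok_host))
print(host_problems(bad_host))
```

Keeping each failed condition as a separate message mirrors the article's list of requirements. It makes it clear which of the two conditions a host is missing.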

Q: What does it mean when it is in an error state?

If the check reports an error, one or more hosts have an RDMA configuration issue that prevents vSAN from using RDMA for data communication. In that case, the entire vSAN cluster falls back to TCP.

Q: How does one troubleshoot and fix the error state?
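A single misconfigured host is enough to switch the whole cluster to TCP, so the outcome is all-or-nothing. The sketch below only illustrates that rule. The cluster_transport function and its input (a host name mapped to a pass/fail result) are hypothetical, and the sketch makes no claim about how vSAN performs the switch internally.

```python
def cluster_transport(host_rdma_ok: dict) -> tuple:
    """Return ("RDMA", []) only if every host passes; otherwise ("TCP", failing hosts).

    host_rdma_ok maps a host name to True when that host meets all RDMA
    requirements (hypothetical input shape, for illustration only).
    """
    failing = sorted(name for name, ok in host_rdma_ok.items() if not ok)
    return ("TCP", failing) if failing else ("RDMA", [])

print(cluster_transport({"esx-01": True, "esx-02": True, "esx-03": True}))
print(cluster_transport({"esx-01": True, "esx-02": False, "esx-03": True}))
```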

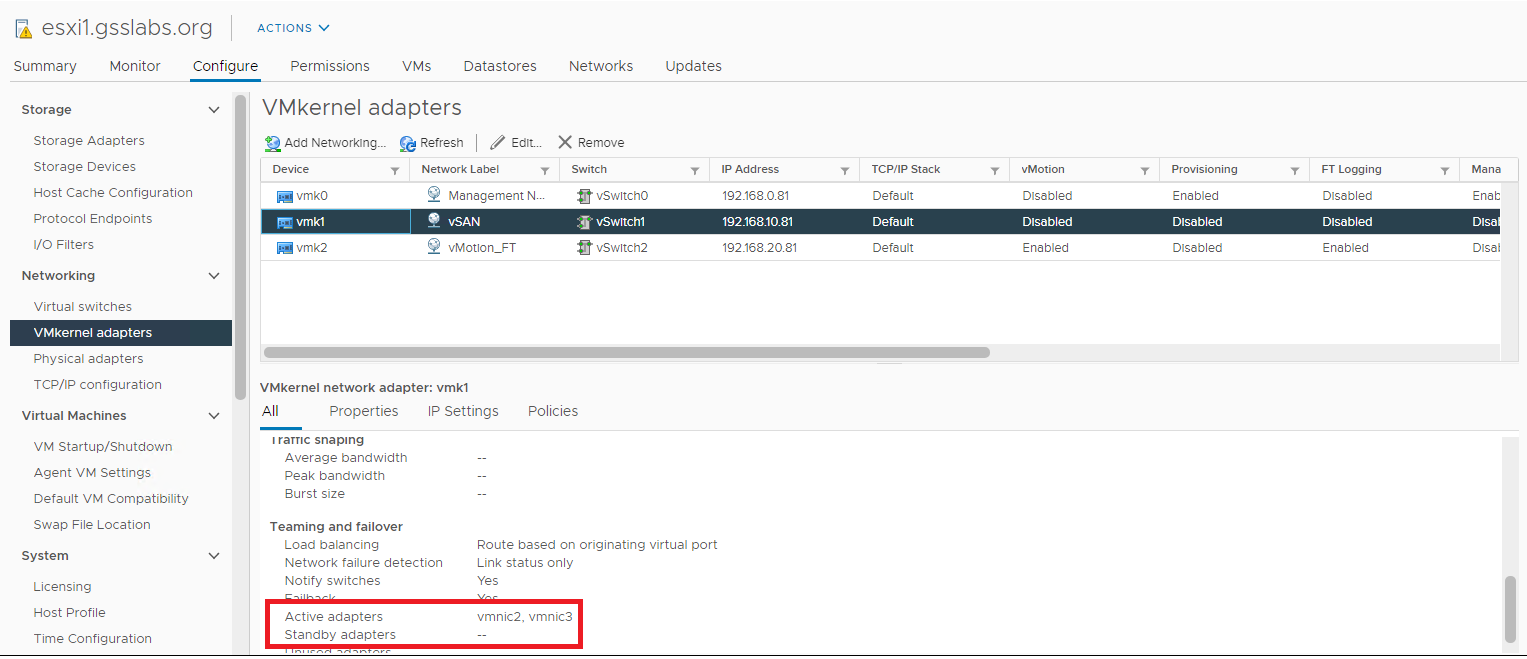

To troubleshoot RDMA configuration issues, follow these steps:

- Find the vSAN uplinks, either from vCenter or from the ESXi command line.

a) vCenter

b) ESXi command line

- Run esxcli vsan network list to identify the VMkernel interface (vmknic) used for vSAN traffic:

    esxcli vsan network list
    Interface
       VmkNic Name: vmk1
       IP Protocol: IP
       Interface UUID: 076d895f-1abe-a4e4-b1a9-0050560181d5
       Agent Group Multicast Address: 224.2.3.4
       Agent Group IPv6 Multicast Address: ff19::2:3:4
       Agent Group Multicast Port: 23451
       Master Group Multicast Address: 224.1.2.3
       Master Group IPv6 Multicast Address: ff19::1:2:3
       Master Group Multicast Port: 12345
       Host Unicast Channel Bound Port: 12321
       Data-in-Transit Encryption Key Exchange Port: 0
       Multicast TTL: 5
       Traffic Type: vsan

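- Run esxtop and press n to open the network view. For each vSAN VMkernel interface (USED-BY column, for example vmk1), the TEAM-PNIC column shows the physical uplink (vmnic) it uses.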
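When many hosts need checking, the VMkernel interfaces that carry vSAN traffic can be extracted from the esxcli vsan network list output with a script. The following sketch parses the text format shown above. It assumes each interface block starts with an "Interface" line followed by "Key: Value" fields. The vsan_vmknics function is hypothetical, and the esxtop step is still needed to map each vmknic to its physical uplink.

```python
# Excerpt of the sample output from this article.
VSAN_NETWORK_SAMPLE = """\
Interface
   VmkNic Name: vmk1
   IP Protocol: IP
   Interface UUID: 076d895f-1abe-a4e4-b1a9-0050560181d5
   Agent Group IPv6 Multicast Address: ff19::2:3:4
   Traffic Type: vsan
"""

def vsan_vmknics(output: str) -> list:
    """Return VmkNic names whose Traffic Type is 'vsan' (assumes the layout shown above)."""
    blocks, current = [], {}
    for line in output.splitlines():
        if line.strip() == "Interface":
            # A new interface block begins; keep the previous one if it had fields.
            if current:
                blocks.append(current)
            current = {}
            continue
        # Split on the first colon only, so IPv6 values such as ff19::2:3:4 stay intact.
        key, sep, value = line.partition(":")
        if sep:
            current[key.strip()] = value.strip()
    if current:
        blocks.append(current)
    return [b["VmkNic Name"] for b in blocks
            if b.get("Traffic Type") == "vsan" and "VmkNic Name" in b]

print(vsan_vmknics(VSAN_NETWORK_SAMPLE))
```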

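- On each vSAN data node, verify that the vSAN uplinks support RDMA by running the command below. Each vSAN uplink should be listed under Paired Uplink for an RDMA device whose State is Active: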

    [root@w1-vsan-esx385:~] esxcli rdma device list
    Name     Driver      State   MTU   Speed    Paired Uplink  Description
    -------  ----------  ------  ----  -------  -------------  -----------
    vmrdma2  nmlx5_rdma  Active  1024  25 Gbps  vmnic0         MT27710 Family [ConnectX-4 Lx]
    vmrdma3  nmlx5_rdma  Active  1024  25 Gbps  vmnic1         MT27710 Family [ConnectX-4 Lx]

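The esxcli rdma device list check can be automated in the same way: every vSAN uplink should be the Paired Uplink of an RDMA device in the Active state. This sketch assumes the column layout shown above. In particular, it assumes Speed is printed as a number plus a unit (for example, 25 Gbps) and Description is the last column. The uplinks_missing_rdma function is hypothetical.

```python
# Sample output from this article.
RDMA_SAMPLE = """\
Name     Driver      State   MTU   Speed    Paired Uplink  Description
-------  ----------  ------  ----  -------  -------------  -----------
vmrdma2  nmlx5_rdma  Active  1024  25 Gbps  vmnic0         MT27710 Family [ConnectX-4 Lx]
vmrdma3  nmlx5_rdma  Active  1024  25 Gbps  vmnic1         MT27710 Family [ConnectX-4 Lx]
"""

def uplinks_missing_rdma(output: str, vsan_uplinks: list) -> list:
    """Return the vSAN uplinks that are not paired with an Active RDMA device."""
    active_paired = set()
    for line in output.splitlines()[2:]:  # skip the header and dashed separator rows
        # Assumed layout: Name, Driver, State, MTU, speed value, speed unit,
        # Paired Uplink, then the free-text Description (kept as one field).
        fields = line.split(maxsplit=7)
        if len(fields) < 7:
            continue
        state, paired_uplink = fields[2], fields[6]
        if state == "Active":
            active_paired.add(paired_uplink)
    return [u for u in vsan_uplinks if u not in active_paired]

print(uplinks_missing_rdma(RDMA_SAMPLE, ["vmnic0", "vmnic1"]))
print(uplinks_missing_rdma(RDMA_SAMPLE, ["vmnic0", "vmnic2"]))
```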
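- Check the DCB settings on each associated uplink by running the command below. Mode should report IEEE, and PFC should be enabled for priority 3. In PFC Configuration, which lists priorities 0 to 7, this appears as a 1 in the fourth position: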

    [root@w1-vsan-esx385:~] esxcli network nic dcb status get -n vmnic0
       Nic Name: vmnic0
       Mode: 3 - IEEE Mode   <-- required for a lossless Layer 2 environment
       Enabled: true
       Capabilities:
          Priority Group: true
          Priority Flow Control: true
          PG Traffic Classes: 8
          PFC Traffic Classes: 8
       PFC Enabled: true
       PFC Configuration: 0 0 0 1 0 0 0 0   <-- PFC enabled for priority 3
       IEEE ETS Configuration:
          Willing Bit In ETS Config TLV: 0
          Supported Capacity: 8
          Credit Based Shaper ETS Algorithm Supported: 0x0
          TX Bandwidth Per TC: 12 13 12 13 12 13 12 13
          RX Bandwidth Per TC: 12 13 12 13 12 13 12 13
          TSA Assignment Table Per TC: 2 2 2 2 2 2 2 2
          Priority Assignment Per TC: 0 1 2 3 4 5 6 7
          Recommended TC Bandwidth Per TC: 12 13 12 13 12 13 12 13
          Recommended TSA Assignment Per TC: 2 2 2 2 2 2 2 2
          Recommended Priority Assignment Per TC: 0 1 2 3 4 5 6 7
       IEEE PFC Configuration:
          Number Of Traffic Classes: 8
          PFC Configuration: 0 0 0 1 0 0 0 0
          Macsec Bypass Capability Is Enabled: 0
          Round Trip Propagation Delay Of Link: 0
          Sent PFC Frames: 0 0 0 0 0 0 0 0
          Received PFC Frames: 0 0 0 0 0 0 0 0

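The DCB output can also be checked with a script for the two values this health check depends on. The sketch below parses the "Key: Value" lines from esxcli network nic dcb status get and checks that Mode reports IEEE. It reads PFC Configuration as eight positions indexed by priority 0 to 7, so a 1 in position 3 means PFC is enabled for priority 3. It also checks the Enabled and PFC Enabled flags, which is an assumption beyond the two values named in this article. The field handling is based only on the sample output above, and the dcb_problems function is hypothetical.

```python
# Excerpt of the sample output from this article.
DCB_SAMPLE = """\
   Nic Name: vmnic0
   Mode: 3 - IEEE Mode
   Enabled: true
   PFC Enabled: true
   PFC Configuration: 0 0 0 1 0 0 0 0
"""

REQUIRED_PFC_PRIORITY = 3

def dcb_problems(output: str) -> list:
    """Return reasons why a NIC's DCB settings do not meet the lossless requirement."""
    fields = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            # A repeated key (e.g. the nested "PFC Configuration") overwrites the earlier value.
            fields[key.strip()] = value.strip()
    problems = []
    if "IEEE" not in fields.get("Mode", ""):
        problems.append("DCB mode is not IEEE")
    if fields.get("Enabled") != "true":
        problems.append("DCB is not enabled")
    if fields.get("PFC Enabled") != "true":
        problems.append("PFC is not enabled")
    # One position per priority 0..7; "1" means PFC is enabled for that priority.
    bits = fields.get("PFC Configuration", "").split()
    if len(bits) != 8 or bits[REQUIRED_PFC_PRIORITY] != "1":
        problems.append(f"PFC is not enabled for priority {REQUIRED_PFC_PRIORITY}")
    return problems

print(dcb_problems(DCB_SAMPLE))
print(dcb_problems(DCB_SAMPLE.replace("0 0 0 1 0 0 0 0", "0 0 0 0 1 0 0 0")))
```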